The BitNetMCU project simplifies the development of highly accurate neural networks for very basic microcontrollers such as the CH32V003, WCH's well-known 10-cent RISC-V MCU. While recent attention has centered on sophisticated AI tools like large language models and image generators, simpler AI applications are proving valuable across many sectors; TinyML, for example, is improving manufacturing, environmental monitoring, and healthcare. Hardware advances keep enabling TinyML by reducing size, cost, and power consumption, and algorithmic improvements are making it feasible to run more capable models on minimal resources. Because accuracy usually remains the priority, much of this work focuses on shrinking models without sacrificing performance.

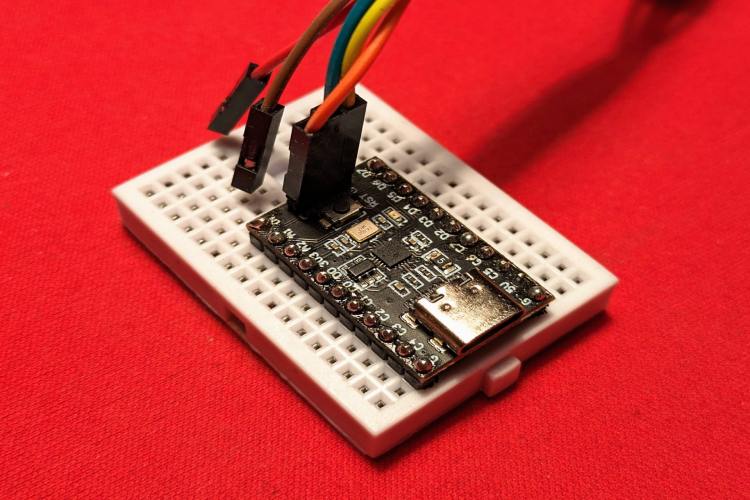

BitNetMCU provides a framework for training and optimizing neural networks for basic microcontrollers. It specifically targets low-end RISC-V parts like the CH32V003, which has very little memory (16 KB of flash and 2 KB of SRAM) and lacks a hardware multiplier, normally a key resource for the multiply-accumulate operations at the heart of neural network inference. Despite these limitations, the CH32V003's low cost makes it an attractive option for large sensor networks.
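To illustrate what a missing multiplier means in practice, here is a minimal C sketch of the shift-and-add method that software multiplication falls back on. On a RISC-V core without the M extension, GCC typically emits a call to a libgcc helper (`__mulsi3`) for each multiplication; the function `mul_shift_add` below is a hypothetical stand-in that shows the same idea and is not code from BitNetMCU.

```c
#include <stdint.h>

/* Hypothetical helper: multiply two 32-bit integers using only shifts,
   additions, and bit tests, as software multiplication does on a core
   without a hardware multiplier. Unsigned arithmetic wraps modulo 2^32,
   so the low 32 bits match a normal signed multiply (the final cast
   relies on the usual two's-complement conversion). */
static int32_t mul_shift_add(int32_t a, int32_t b)
{
    uint32_t multiplicand = (uint32_t)a;
    uint32_t multiplier = (uint32_t)b;
    uint32_t result = 0;

    while (multiplier != 0) {
        if (multiplier & 1u) {
            result += multiplicand; /* add the shifted multiplicand for each set bit */
        }
        multiplicand <<= 1;         /* the next bit position is worth twice as much */
        multiplier >>= 1;
    }
    return (int32_t)result;
}
```

Because the loop runs once per bit of the multiplier, a single multiplication can take dozens of instructions, which adds up quickly across the thousands of weights in even a small network.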

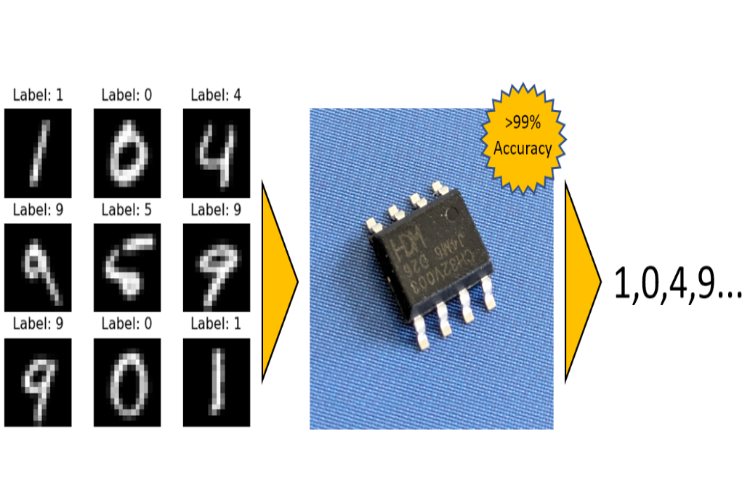

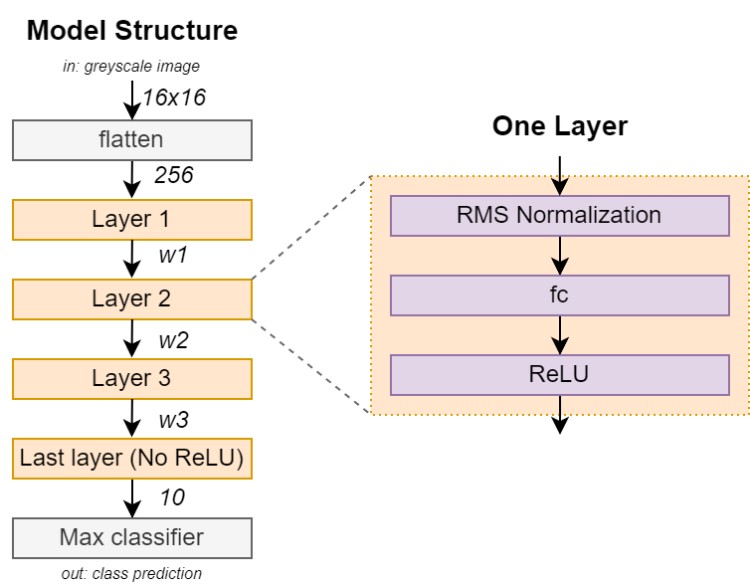

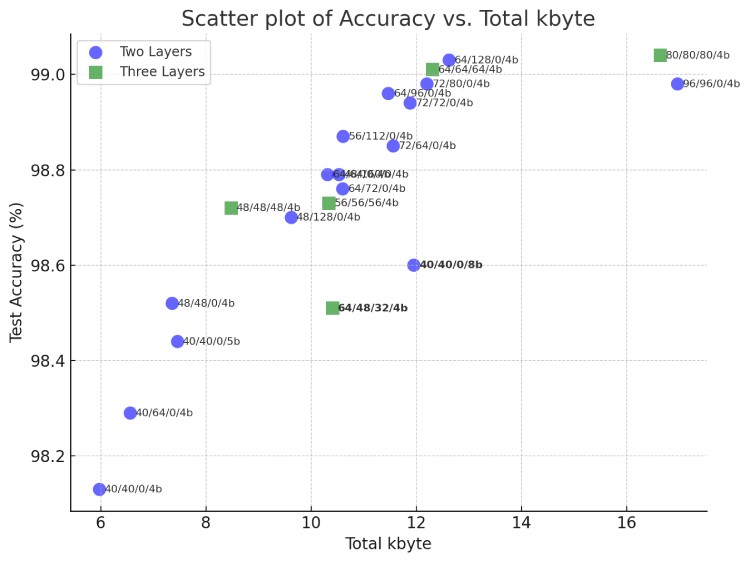

BitNetMCU uses Python scripts and PyTorch to train neural networks, which are then compressed through low-bit quantization to fit on small microcontrollers. It also provides tools to test these models and deploy them to devices like the CH32V003. The framework's inference engine is written in ANSI C, making it portable across hardware platforms. In tests, a handwritten-digit recognition model trained on MNIST images downscaled to 16x16 pixels was shrunk enough to fit within the CH32V003's memory constraints. Although the chip has no multiplication instruction, the inference code replaces multiplications with sequences of additions, and the model still reaches an accuracy above 99%.
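The following is a minimal C sketch of why low-bit quantization removes the need for multiplication, assuming ternary weights (-1, 0, +1) packed two bits each. The packing layout, the `W_POS`/`W_NEG` codes, and the function name `ternary_dot` are hypothetical; BitNetMCU supports several quantization formats, and its actual encoding and kernels may differ.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical 2-bit encoding, four weights per byte, lowest bits first:
   0b01 = +1, 0b10 = -1, 0b00 (or 0b11) = 0. */
#define W_POS 0x1u
#define W_NEG 0x2u

/* Dot product of int8 activations with packed ternary weights.
   Each weight only decides whether an activation is added, subtracted,
   or skipped, so the inner loop uses no multiply instruction. */
static int32_t ternary_dot(const int8_t *act, const uint8_t *packed_w, size_t n)
{
    int32_t acc = 0;
    for (size_t i = 0; i < n; i++) {
        /* Byte index is i / 4 and bit offset is (i % 4) * 2, written as shifts. */
        uint8_t code = (uint8_t)((packed_w[i >> 2] >> ((i & 3u) << 1)) & 0x3u);
        if (code == W_POS) {
            acc += act[i];
        } else if (code == W_NEG) {
            acc -= act[i];
        }
    }
    return acc;
}
```

For example, activations `{10, -3, 7, 5, 2}` with weights `+1, -1, 0, +1, -1` pack into the bytes `0x49, 0x02` and give `10 + 3 + 5 - 2 = 16`. Wider weights would need a different decomposition, such as a few shifted additions per weight.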

The project's source code and comprehensive documentation are available on GitHub, making BitNetMCU an accessible way to explore TinyML with minimal investment. The documentation emphasizes efficient training and inference of quantized neural networks on low-end microcontrollers, achieving high accuracy while making the most of limited memory and processing power.